2023, the year of “zero-ETL”

With a little delay compared to its competitors Microsoft and Google, AWS has proclaimed that 2023 will be a "zero-ETL" year. What does that mean and what does it change for us? It essentially means that performing analytical analysis on our production data has never been so simple! Explanations.

The essential data warehouse: why should we not query our production database directly for analytical analysis?

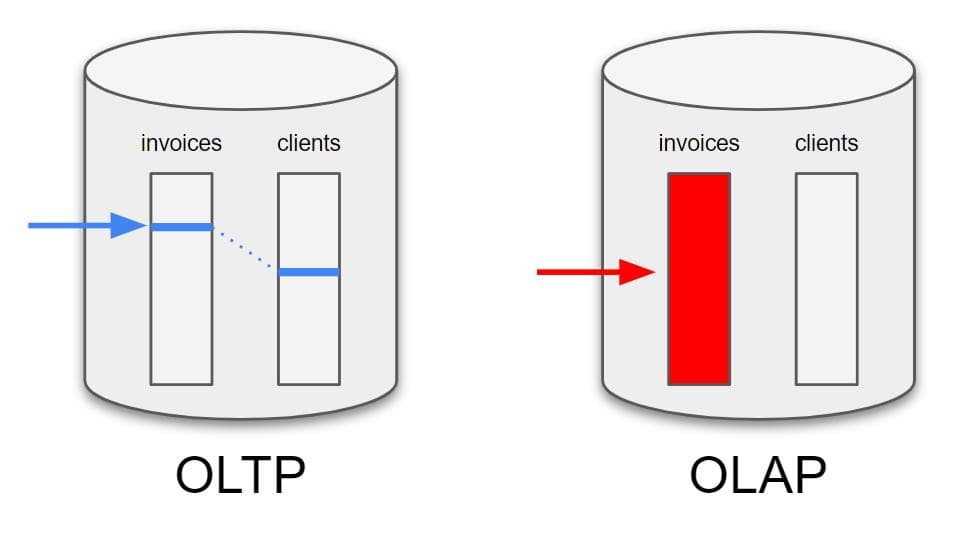

Because analytical-type queries (OLAP*) are of a very different nature from our good old traditional SQL queries (OLTP*). In general, reads performed on a relational database are very fast and require little disk access, thanks among other things to the advanced mechanisms of indexing and data joins.

For example: "What is the phone number of the customer of invoice #43584."

The query is almost instantaneous because the query engine knows exactly where to read the information on the hard drive, starting from the invoice number and then the customer ID.

Now imagine that we want to know the invoice with the highest amount: in this case the query engine has no choice but to scan the entire invoices table, looking for the highest amount. This is an OLAP-type query.

If the invoices table is 10GB, it is easy to imagine that this type of query can greatly impact the performance of the database.

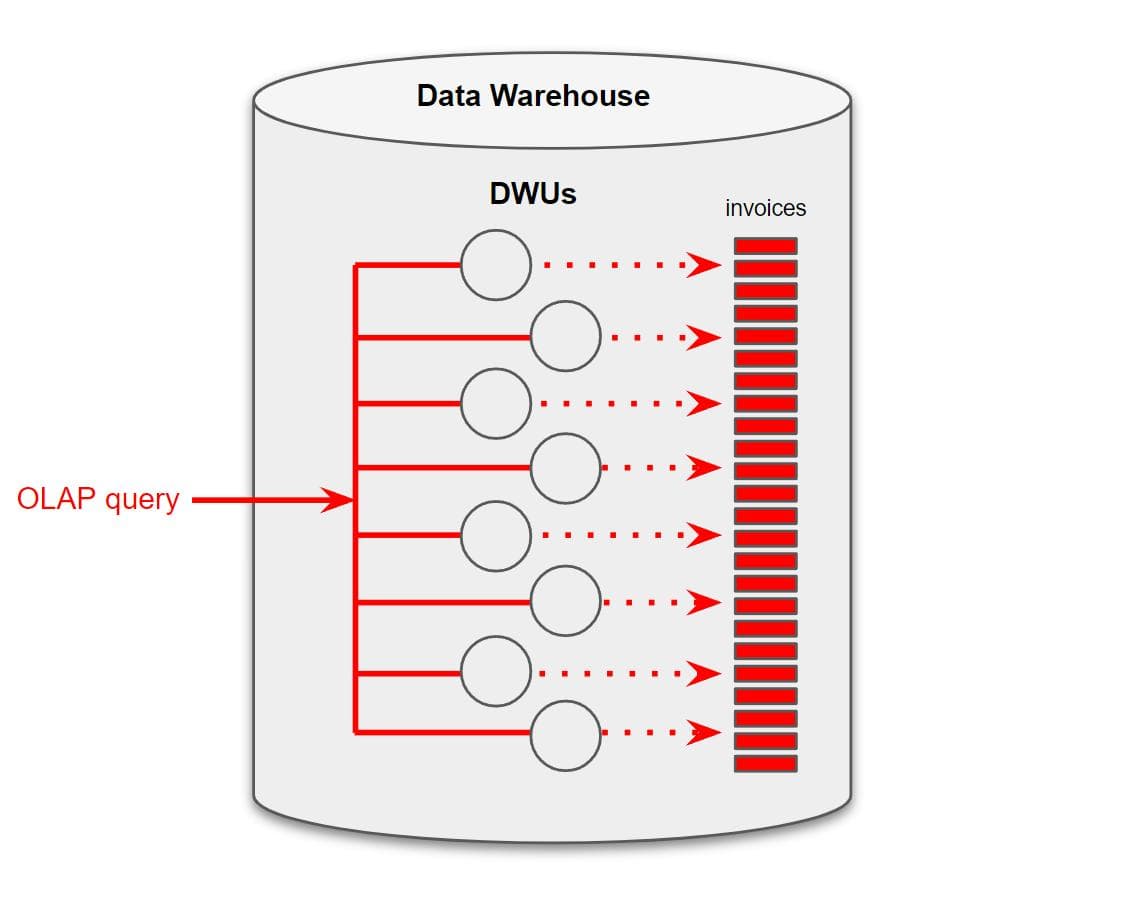

That's why we invented data warehouses! A data warehouse is a relational database optimized for OLAP queries. The principle is simple: divide to conquer. The database is divided into a multitude of small fragments that will be read in parallel by as many compute nodes (Data Warehouse Units), thus multiplying performance.

Until today, using Redshift, AWS's data warehouse, required some work: it involved regularly extracting data from the production database (either in real-time streaming or in batch via an S3 data lake) before loading it into Redshift. This is actually an ETL (Extract, Transform, Load) operation that is necessary to feed the data warehouse.

AWS 2023: a direct connection between RDS Aurora and Redshift !

AWS announced in December 2022 the integration of the SQL database RDS Aurora into Redshift, all in full serverless and without any ETL operation.

This means that in a matter of seconds, you can instantiate a Redshift that is able to synchronize itself, and in almost real-time, with your production SQL database. It then becomes possible to perform OLAP-type SQL queries on your data in real-time and without impacting your production performance. All of this without deploying any data architecture or data lake, and while enjoying fully managed scaling. Hallelujah!

From zero-ETL to Python notebook

The AWS teams did not stop there, as they also announced the integration of Apache Spark with Redshift. Apache Spark should be seen as a notebook (Python or SQL) boosted with steroids. Spark is based on a parallelization principle in which data is partitioned and stored in RAM on dozens or hundreds of compute nodes. It is therefore possible to create and load a massive amount of data into a dataframe (similar to that of Python Pandas) and take advantage of the comfort and simplicity of a Python (or SQL) notebook, beloved by data scientists, for exploratory data analysis.

By transitivity, RDS to Redshift to Spark, AWS therefore gives us the opportunity to explore our production data in almost real-time from a simple notebook, without having to deploy any instances or even crawling.

PROs of zero-ETL:

almost real-time replication of the production database

on-demand use of Spark with adjustable capacity

a significant gain in time and money: no development is necessary!

CONs:

does not allow for data transformation: no cleaning, denormalization or star schema...

Despite this important last limitation, we are witnessing a major advancement in the democratization of data. I would not be surprised to see this year an explosion of the number of data projects based on the RDS/Redshift pair. As for Spark in full serverless mode, and its integration with Redshift (but also with AWS Athena!), it is already a small revolution in itself.

*: OLTP (online transaction processing) / OLAP (online analytical processing)