MCP servers and Apps SDK: the AI ‘App Store’ is up and running

Table of contents

- Introduction

- A technological revolution in the vein of the App Store?

- What is an MCP server? Or the art of connecting AI to the real world

- Why is everyone getting involved? A strategic challenge for AI platforms

- Use cases already within reach

- Towards a reinvented (and proactive) user experience

- How to prepare for the MCP wave?

- Conclusion: there will be a tool for everything – and it's time to act

Introduction

We live in an era where technological innovations are coming thick and fast. Seventeen years ago, Apple's App Store revolutionised the way mobile applications were distributed, opening up a whole new world of opportunity for many products and services. Today, a transformation of comparable magnitude is on the horizon in the field of generative artificial intelligence. Its name? MCP, which stands for Model Context Protocol. In practice, you will mostly hear about MCP servers, or AI connectors. These connectors could well become for AI what the App Store was for mobile: a thriving ecosystem paving the way for new uses and new sources of revenue.

In this article, we will discover what MCP servers are, why they represent a strategic turning point for AI platforms (and for user companies), and what concrete use cases are already available to us. We will also see how these connectors herald a new user experience – smoother and more proactive – and how to prepare for it now. Professional yet light-hearted, this article is aimed particularly at product managers and decision-makers who want to stay one step ahead.

A technological revolution in the vein of the App Store?

A quick look back at 2008

At the time, the iPhone had just launched its brand new App Store, offering a single portal for downloading mobile applications. BlackBerry still ruled the smartphone market, but Apple was able to turn an innovation (mobile applications) into a sustainable business model and a unique distribution network. The success was meteoric: a billion active devices and tens of billions of dollars in revenue generated via the App Store in just a few years. Suddenly, ‘there was an app for everything.’ A new platform was born, bringing with it a host of uncharted territory on which to build products and services.

Fast forward to 2025

Assistants built on large language models (LLMs), such as ChatGPT and Claude, have reached hundreds of millions of active users (around 700 million weekly users for ChatGPT[1]) – unprecedented for a software product. However, the monetisation of these AIs remains below what one might expect from such an installed base (approximately $10 billion in annualised revenue for OpenAI, the publisher of ChatGPT[2]). In comparison, Apple and Google derive much more value per user from their mobile ecosystems. Why such a discrepancy? Mainly because our current AI assistants are isolated: they excel at generating text, code or images, but still operate in a vacuum, with no direct link to our everyday tools or the online services we use.

This is where the concept of AI platforms comes in. Current competition between AI players is largely based on model performance (GPT-4 vs Claude vs Llama 2, etc.). But the next battle will be fought over the ecosystem: in other words, the ability to connect these models to everything around us. MCP servers are precisely the technological building block that enables these connections. If we dare to make the comparison, they are to AI what applications were to smartphones: a means of considerably expanding the scope of uses. So much so that some people are already talking about a future AI App Store built around connectors.

Before diving into concrete examples, let's clarify what lies behind the acronym MCP and how it works.

What is an MCP server? Or the art of connecting AI to the real world

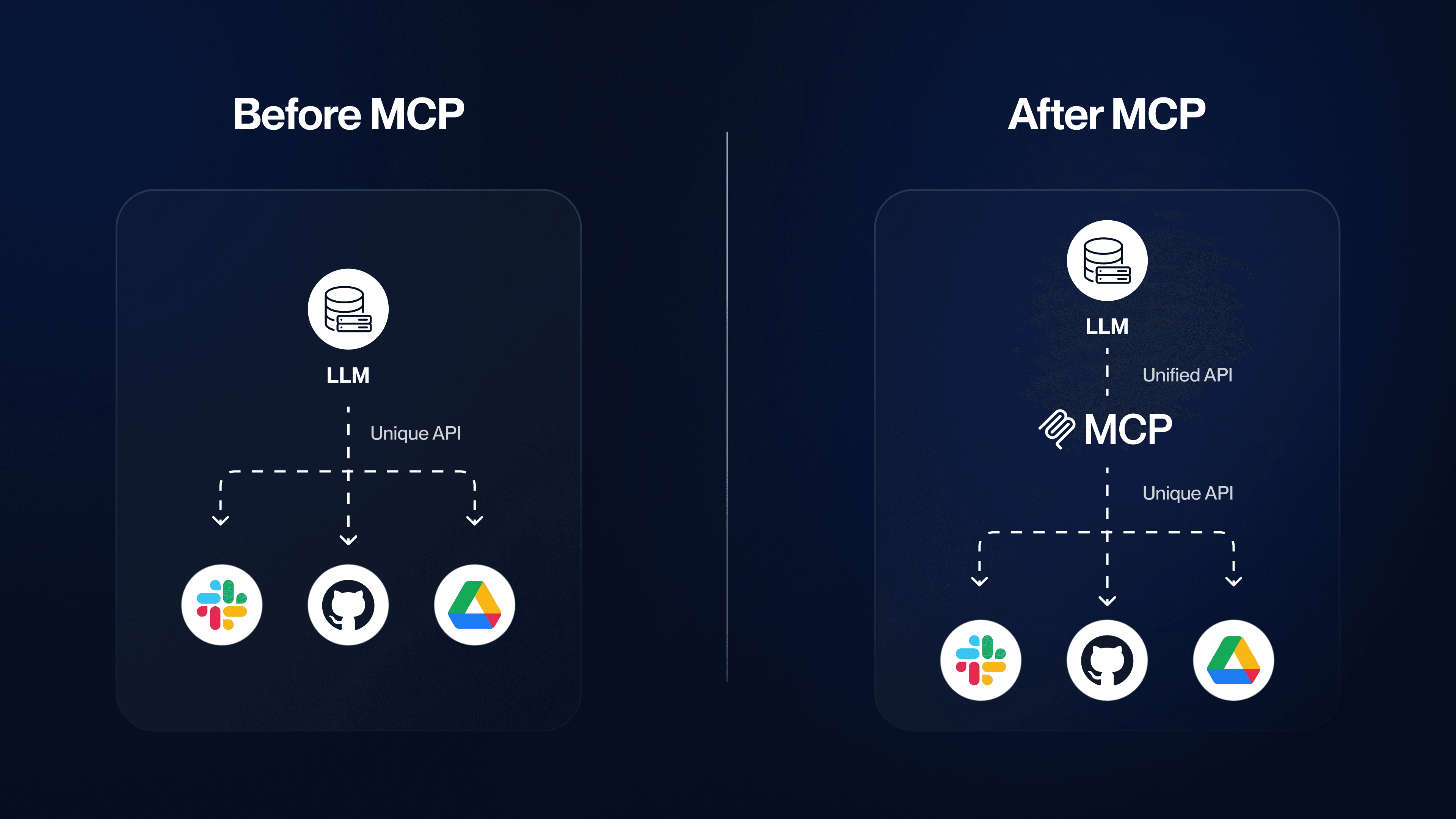

An MCP (Model Context Protocol) server is a standardised connector that allows AI (language models or conversational agents) to connect to external systems, whether databases, SaaS tools, internal applications, etc. Introduced as open source at the end of 2024 by Anthropic (Claude's publisher), this protocol is intended to be universal and open. Anthropic describes it as the ‘USB-C port’ of artificial intelligence[3], a telling metaphor: just as USB-C has unified connections between electronic devices, MCP aims to unify the way AI connects to various digital resources.

In practice, an MCP server is an API (server interface) that exposes certain system functionalities in a form that can be understood by AI. Specifically, MCP allows three types of building blocks to be exposed to the model: Tools (tools for executing actions), Resources (data returning structured information) and Prompts (ready-to-use instruction templates)[4]. For example, a Gmail connector could offer a tool for sending emails, while a Google Drive connector could provide a resource for searching and reading the content of a document. Prompts are essentially pre-configured scenarios that guide the AI in certain complex tasks.
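To make the three building blocks more concrete, here is a minimal sketch in plain Python. It deliberately does not use the official MCP SDK: the `Connector` class, the `drive://reports/q3` address, the `summarise_report` prompt and the report text are all hypothetical names and placeholder data chosen for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Connector:
    """Toy stand-in for an MCP server: one registry per building block."""
    name: str
    tools: Dict[str, Callable[..., str]] = field(default_factory=dict)
    resources: Dict[str, Callable[[], str]] = field(default_factory=dict)
    prompts: Dict[str, str] = field(default_factory=dict)

    def tool(self, fn: Callable[..., str]) -> Callable[..., str]:
        """Register a function as a Tool (an action the model may trigger)."""
        self.tools[fn.__name__] = fn
        return fn

    def resource(self, uri: str):
        """Register a function as a Resource (data the model may read)."""
        def register(fn: Callable[[], str]) -> Callable[[], str]:
            self.resources[uri] = fn
            return fn
        return register

    def describe(self) -> Dict[str, List[str]]:
        """List what the connector exposes, as a model would discover it."""
        return {
            "tools": sorted(self.tools),
            "resources": sorted(self.resources),
            "prompts": sorted(self.prompts),
        }


gmail = Connector("gmail")
drive = Connector("drive")


@gmail.tool
def send_email(to: str, subject: str) -> str:
    # A real connector would call the mail provider's API here.
    return f"queued email to {to}: {subject}"


@drive.resource("drive://reports/q3")  # hypothetical address
def q3_report() -> str:
    return "Q3 report (placeholder content)"


# A Prompt is a reusable instruction template.
drive.prompts["summarise_report"] = "Summarise this report in three bullet points:\n{report}"

print(gmail.describe())
print(drive.describe())
```

The point of the sketch is the separation of roles: Tools act, Resources return data, Prompts guide the model. A real server would expose the same three lists over the protocol rather than through a Python object.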

The great advantage of MCP is that the AI itself decides when and how to use these connectors. Once a connector has been plugged in, the model will know how to choose the right tool at the right time based on the user's needs, without any additional human intervention. This provides unprecedented fluidity: no more tedious copying and pasting between ChatGPT and your other applications!

Use case: Let's imagine that I ask my AI assistant: ‘Send the Q3 report to the whole team’. Naturally, the model does not have this report in mind. Thanks to the MCP connector, it can perform several actions in sequence to complete the task: first, it uses the find_file tool from the Google Drive connector to find the document, then it calls the send_email tool from the Gmail connector to prepare an email with this file as an attachment. With a single request in natural language, the result is achieved: my email is ready to go, without me having to search through Drive or open Gmail myself. The AI orchestrated everything behind the scenes, via standardised calls to the Drive and Gmail APIs.
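The sequence described above can be sketched as follows. This is an illustration under strong assumptions: in a real assistant the model chooses each call itself, whereas here the plan is hard-coded, both tools are stubs, and the `$prev.` placeholder convention, the file identifier and the email address are invented for the example.

```python
from typing import Any, Callable, Dict, List


def find_file(query: str) -> Dict[str, str]:
    """Stub for the Drive connector's find_file tool."""
    index = {"Q3 report": "file-123"}  # placeholder file index
    return {"file_id": index[query], "name": query}


def send_email(to: str, subject: str, attachment_id: str) -> Dict[str, str]:
    """Stub for the Gmail connector's send_email tool: prepares a draft."""
    return {"status": "draft_ready", "to": to, "subject": subject,
            "attachment_id": attachment_id}


TOOLS: Dict[str, Callable[..., Dict[str, str]]] = {
    "find_file": find_file,
    "send_email": send_email,
}


def run_plan(plan: List[Dict[str, Any]]) -> List[Dict[str, str]]:
    """Run tool calls in order; '$prev.<key>' takes a value from the previous result."""
    results: List[Dict[str, str]] = []
    for step in plan:
        arguments = {}
        for key, value in step["arguments"].items():
            if isinstance(value, str) and value.startswith("$prev."):
                value = results[-1][value[len("$prev."):]]
            arguments[key] = value
        results.append(TOOLS[step["tool"]](**arguments))
    return results


# The plan a model might produce for "Send the Q3 report to the whole team".
plan = [
    {"tool": "find_file", "arguments": {"query": "Q3 report"}},
    {"tool": "send_email", "arguments": {
        "to": "team@example.com",
        "subject": "Q3 report",
        "attachment_id": "$prev.file_id",
    }},
]

results = run_plan(plan)
print(results[-1])
```

The output of the first call feeds the second, which is the whole idea of chaining: the user never handles the file identifier.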

What was once a DIY project (endless prompt chains, custom integrations for each service) thus becomes a reliable, standardised and maintainable process. Better still, security is enhanced: instead of sending all your data to the model, the MCP server allows you to filter and transmit only the information that is strictly necessary. The connector can be hosted in your own environment, ensuring that sensitive data remains under the company's control while allowing AI to access it in a targeted manner.
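One simple way to transmit only what is strictly necessary is an allow-list applied inside the connector before anything reaches the model. The sketch below assumes a hypothetical order record; the field names and the `ALLOWED_FIELDS` list are illustrative, not part of MCP.

```python
from typing import Any, Dict, Iterable

# Hypothetical allow-list: the only fields the model is permitted to see.
ALLOWED_FIELDS = ("order_id", "status", "delivery_date")


def filter_record(record: Dict[str, Any],
                  allowed: Iterable[str] = ALLOWED_FIELDS) -> Dict[str, Any]:
    """Return a copy of the record containing only allow-listed fields."""
    return {key: record[key] for key in allowed if key in record}


# Placeholder record as it might sit in an internal system.
internal_order = {
    "order_id": "A-1001",
    "status": "shipped",
    "delivery_date": "2025-10-03",
    "customer_email": "jane@example.com",
    "card_last4": "4242",
}

visible_to_model = filter_record(internal_order)
print(visible_to_model)
```

An allow-list is safer than a block-list here: a field added to the internal system later stays hidden until someone explicitly decides to expose it.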

In summary, MCP provides AI with universality and context: any service or dataset can become an extension of AI, in a plug-and-play manner. Technically, it relies on standardised JSON exchanges (JSON-RPC) between an MCP client (AI side) and the MCP server (external service side). But for the product manager or user, these details fade into the background behind a simple promise: your favourite AI will be able to use your favourite tools.
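For readers curious about what these JSON-RPC exchanges look like, here is a rough sketch of a tool call and its reply. The message shape (a `tools/call` method with `name` and `arguments`, and a result carrying a `content` list) reflects our reading of the MCP specification and should be checked against it; the `handle` function is a toy, not a real server, and the tool name is taken from the example above.

```python
import json
from typing import Any, Dict


def make_tool_call(request_id: int, name: str, arguments: Dict[str, Any]) -> str:
    """Build the JSON-RPC 2.0 request an MCP client might send to call a tool."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    })


def handle(raw: str) -> str:
    """Toy server side: answer tools/call, reject every other method."""
    request = json.loads(raw)
    if request.get("method") != "tools/call":
        # -32601 is the standard JSON-RPC code for "Method not found".
        error = {"code": -32601, "message": "Method not found"}
        return json.dumps({"jsonrpc": "2.0", "id": request.get("id"), "error": error})
    params = request["params"]
    text = f"called {params['name']} with {params['arguments']}"
    result = {"content": [{"type": "text", "text": text}], "isError": False}
    return json.dumps({"jsonrpc": "2.0", "id": request["id"], "result": result})


request = make_tool_call(1, "find_file", {"query": "Q3 report"})
response = json.loads(handle(request))
print(json.dumps(response, indent=2))
```

The `id` field is what lets the client match each reply to the request that triggered it, even when several calls are in flight.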

Why is everyone getting involved? A strategic challenge for AI platforms

We understand the appeal for users, but what about the major players in AI (OpenAI, Google, Anthropic, etc.)? Why are they pushing these MCP connectors? The answer can be summed up in one word: economics.

Let's go back to our comparison with the App Store. Apple didn't just improve the iPhone user experience by offering apps; above all, the company created a lucrative ecosystem from which it captures a share of the value. Each transaction on the App Store typically earns Apple a 15-30% commission, not to mention the fees charged to developers to publish their apps and advertise on the platform. As a result, in 2022, the App Store ecosystem accounted for about $1.1 trillion in total sales (across all sectors), including approximately $104 billion in digital goods and services alone[7][8]. Even though more than 90% of this gross revenue goes to developers, that still leaves tens of billions in Apple's pockets every year. The lesson here is that controlling the distribution platform means getting a (large) slice of the pie.

Today, OpenAI and its peers find themselves in a somewhat paradoxical situation: their models are used extensively, but much of the value created escapes their control. A telling example: when a company integrates GPT-4 via the API to improve its application, OpenAI only receives the computing fees (the famous tokens) – an amount that is often negligible compared to the subscription price that the end user pays for the application in question. Similarly, if you use a mobile service based on ChatGPT, Apple/Google will take its commission on the app, while OpenAI will only receive a micro-payment for the requests processed behind the scenes. Frustrating, isn't it?

MCP servers offer AI providers a chance to turn the tables. By attracting users to their own interface (e.g. the ChatGPT or Claude app) to consume various services via connectors, OpenAI and others can finally capture added value. How? In the same way as Apple: by taking a commission on transactions made within their ecosystem. OpenAI makes no secret of this: in September 2025, the company launched an integrated payment feature called Instant Checkout on ChatGPT, in partnership with Etsy and Shopify, where ‘merchants will have to pay OpenAI a fee on each completed purchase’[9][10]. The news was immediately welcomed by the markets, with Etsy and Shopify shares rising 4-7% following the announcement[11][12]. This is a clear signal: conversational commerce is coming, and it is opening up a new direct revenue stream for AI platforms.

But beyond financial transactions, there is also a battle for adoption. Historically, when a platform offers developers a new playground, it secures a lasting lead. Microsoft did it with Windows, Apple with the App Store, Salesforce with its AppExchange, etc. OpenAI, Anthropic, Google: everyone wants to become the central hub where users, developers and businesses converge. Even if it means that in the future, we no longer go to a service's website or mobile app, but instead use ChatGPT or Claude to access it... just as we no longer necessarily install a desktop program to use a web service, but simply open a browser.

In short, promoting MCP connectors is a way for AI giants to build user loyalty (as users will no longer need to leave the platform) and monetise these third-party uses (through commissions, sponsored promotion, etc.). It is also a way to catch up if they fall behind: an AI platform that is technically inferior could compensate by offering the richest ecosystem of connectors. Conversely, a current champion could be dethroned if it neglects this dimension. The history of technology is full of dinosaurs that became obsolete because they failed to embrace a new wave... remember Nokia in the post-iPhone era?

Use cases already within reach

OK, so it all looks promising on paper, but in practical terms, what more will we be able to do with MCP servers? The most honest answer is probably: an infinite number of things we haven't even thought of yet. However, let's look at a few telling examples – some already being tested, others very easy to imagine – that show the potential of these connectors in various sectors:

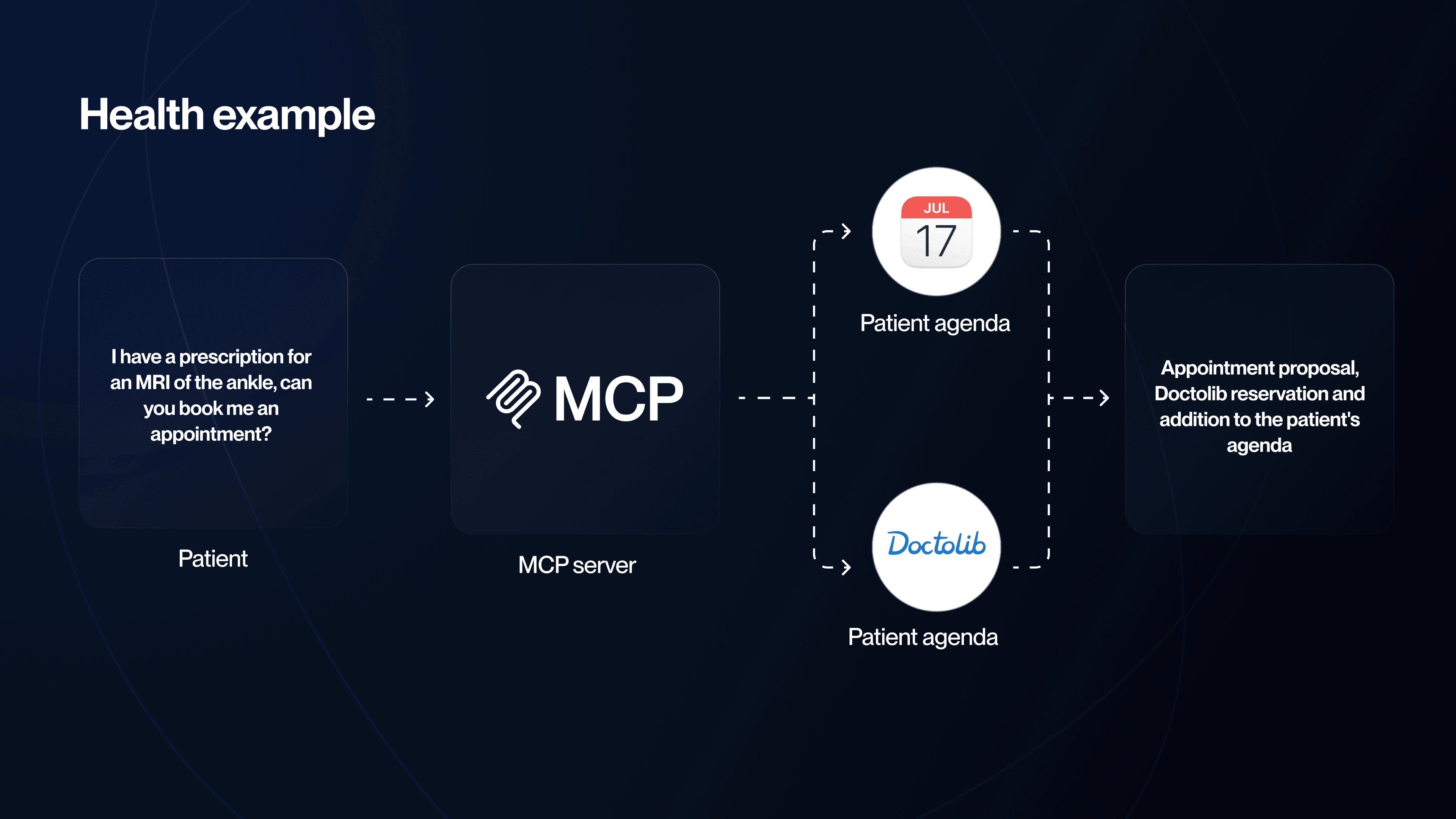

Health and wellbeing:

Many individuals use ChatGPT or Claude to obtain medical information or health advice (with all the precautions that this entails). In the future, a Doctolib connector (or other medical appointment booking service) could be integrated into the assistant. You describe your symptoms, and the AI responds but also suggests, ‘How about making an appointment with a general practitioner? Dr. Martin is available on Monday at 3 p.m.’ In one sentence, your appointment is made, without going through the app or external website. Similarly, a telemedicine connector could allow the AI to directly initiate a teleconsultation if necessary.

Travel, leisure and e-commerce:

This is undoubtedly the area where the immediate benefits are most apparent.

Imagine planning your holiday through a natural conversation: ‘I'm looking for a destination in Southern Europe, for my family, with beaches and cultural visits, budget £2,000’. The AI could use a Booking.com (or Expedia, Airbnb, etc.) connector to compare offers, display a few relevant hotels or rentals with photos, and then – once you've made your choice – book the trip directly. No more juggling ten tabs: the assistant becomes your personal travel agent.

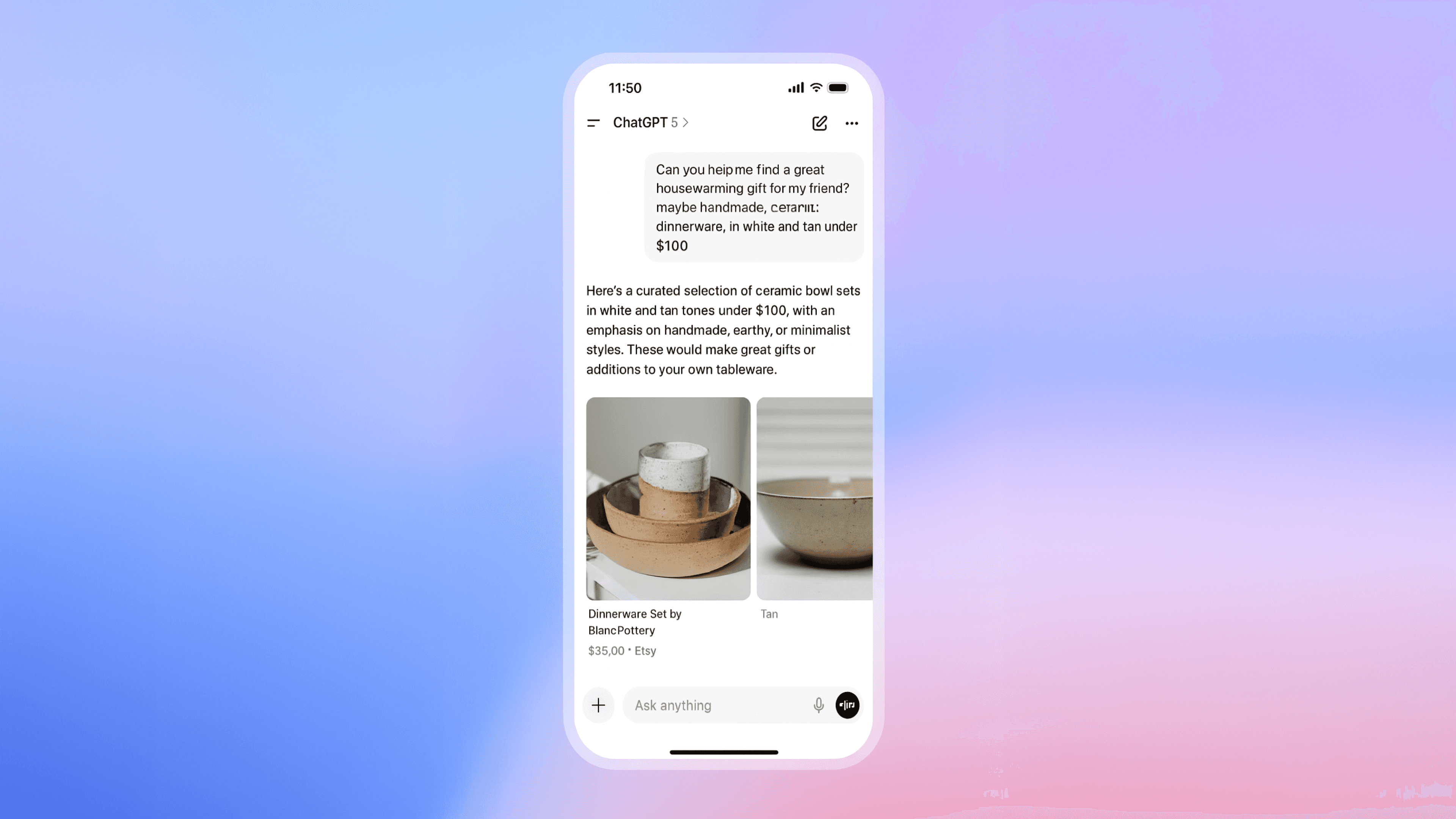

OpenAI has already begun rolling out features along these lines: via Shopify and Etsy, ChatGPT can now display relevant products during a shopping search and allow you to purchase them on the spot, including payment, without leaving the chat[13][14]. This is just the beginning: other partners are joining the fray, and multi-item shopping baskets have been announced for the near future[15][14]. It is easy to extrapolate to booking concert tickets (via a Ticketmaster connector), buying clothes (Zalando or Zara connector), etc. Your AI assistant will not only be able to advise you, but also complete your purchases with just a few voice or text commands.

Retail and food:

Shopping could become much easier. You discuss recipes and menus for the week with AI, which generates an optimised shopping list for you. A Carrefour or Auchan connector then takes over: with a single click (or sentence), your basket is filled at the supermarket drive-through, ready to be confirmed. No more time spent browsing virtual shelves; the AI knows your food preferences and can even suggest alternatives if a product is unavailable. Again, everything happens in conversation.

Sport, education, productivity:

There is no shortage of areas of application. A runner could request a personalised training plan; their assistant would draw on their Garmin data (via a connector) to determine their level, then create a tailored programme, automatically synchronised with their smartwatch. A student could use a Wikipedia or university library connector to obtain verified references for their research, rather than relying solely on the model's internal knowledge. Finally, a professional would have their AI assistant access their calendar, files, Slack, etc., to summarise the latest company news every morning and prepare for upcoming meetings.

In a nutshell: wherever there is data to be retrieved or actions to be performed in connection with software, an MCP server can add value. AI becomes a conductor capable of coordinating a multitude of services to serve us.

Towards a reinvented (and proactive) user experience

Integrating external services into a conversational agent will not only multiply its powers; it will also change the way we interact with it. Until now, ChatGPT and its counterparts have mainly communicated in plain text. With their new connectors, they will be able to present us with real mini-interfaces within the conversation itself.

Imagine, for example, that you are searching for ‘the best running trainers under £100’. Rather than returning a text list, the assistant could display a visual carousel of products (photos, names, prices) thanks to an e-commerce connector. You could refine your selection using buttons (e.g. sort by popularity, filter by size), then press ‘Buy’ directly in the chat. This is exactly the kind of experience that OpenAI has begun to roll out with its Shopify/Etsy partnership: from discussion to payment in just a few taps, with AI acting as a personal shopper[16][17]. For the user, it's transparent and seamless; for the seller, it's an unexpected additional sales channel.

Beyond the visual aspect, these conversational platforms will become increasingly proactive. A true assistant does not just wait to be asked for help: it anticipates your needs. In the future, your intelligent agent could send you notifications in the morning to brief you on the day's important tasks: "🕘 Good morning, Céline. You have three urgent emails (I've prepared draft replies ready to be sent), two meetings this afternoon (here are the related documents) and the weather forecast is predicting rain – remember to take an umbrella. Have a great day!" This scenario is not futuristic: technically, everything already exists to make it happen via connectors (email, calendar, weather, etc.). All that is needed is for the interface to support it. In fact, some pioneering applications (Perplexity AI, for example) offer daily summaries of the news or your information feeds. We can bet that ChatGPT, Claude and others will soon have their own version of the personalised ‘morning brief’.

In short, thanks to MCP connectors and a few interface developments, conversational AI is evolving from a fun but passive toy to an intelligent and proactive co-pilot. This naturally raises questions about experience (how much should we trust it? How can we avoid overuse?) and ethics (how can we guarantee the neutrality of suggestions if brands are behind certain connectors?). These challenges will have to be addressed, but they are unlikely to slow down adoption, given the clear benefits for users.

How to prepare for the MCP wave?

For product managers and decision-makers, the rise of MCP servers raises two key questions: ‘Should our product join this ecosystem?’ and ‘How can we best integrate it?’ Here are some points to consider so you don't miss out on this emerging revolution.

Assess the relevance for your business

Not all services will necessarily lend themselves to conversational integration (at least not immediately). Analyse your use cases: is your application/project based on data or actions that could be triggered by a simple natural language instruction? If so, an MCP connector will bring real added value by eliminating friction for the user. Conversely, if your added value lies mainly in a complex visual interface or human expertise, MCP may be less important. Prioritise according to the potential impact.

Think of MCP as a product in its own right

Creating an MCP server amounts to developing a new interface for your service, just like a mobile app or public API. This deserves dedicated product consideration: what features should be exposed? What conversational scenarios should be supported? How can you ensure that the AI agent makes the best use of your tool? For example, a connector for a booking service may need to include its own recommendation or filtering logic to avoid overwhelming the user with irrelevant results. You will also need to consider security and performance: limiting calls or returned data, managing user authentication through AI, etc. The good news is that developing an MCP connector is generally less complex than coding a complete native application – existing APIs are often reused. And since the standard is open, the same connector can work with multiple AIs (ChatGPT, Claude, etc.), increasing its ROI.
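As an illustration of limiting calls and returned data, here is a minimal sketch for a hypothetical booking connector. The `CallBudget` class, the `search_hotels` function, the per-session limit and the hotel data are all assumptions made for the example; a production connector would also need authentication and time-based limits.

```python
from typing import Any, Dict, List


class CallBudget:
    """Counts calls per session and refuses any beyond a fixed maximum."""

    def __init__(self, max_calls: int) -> None:
        self.max_calls = max_calls
        self.used: Dict[str, int] = {}

    def allow(self, session_id: str) -> bool:
        count = self.used.get(session_id, 0)
        if count >= self.max_calls:
            return False
        self.used[session_id] = count + 1
        return True


def search_hotels(session_id: str, hotels: List[Dict[str, Any]],
                  budget: CallBudget, max_results: int = 3) -> Dict[str, Any]:
    """Return only the top-rated hotels so the model is not flooded with results."""
    if not budget.allow(session_id):
        return {"error": "call limit reached", "results": []}
    ranked = sorted(hotels, key=lambda hotel: hotel["rating"], reverse=True)
    return {"results": ranked[:max_results], "total_matches": len(hotels)}


# Placeholder catalogue.
catalogue = [
    {"name": "A", "rating": 4.1},
    {"name": "B", "rating": 4.8},
    {"name": "C", "rating": 3.9},
    {"name": "D", "rating": 4.5},
]

budget = CallBudget(max_calls=1)
first = search_hotels("session-1", catalogue, budget, max_results=2)
second = search_hotels("session-1", catalogue, budget, max_results=2)
print(first)
print(second)
```

Returning `total_matches` alongside the shortened list lets the assistant tell the user that more options exist without paying the cost of sending them all.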

Keep an eye on developments in the ecosystem

We are still in the early stages of this movement. MCP connectors are still hidden in experimental menus, documentation remains technical, and conversational interface standards (buttons, lists, integrated forms) are just beginning to emerge. This situation is somewhat reminiscent of the wild west days of the App Store: a blank slate waiting to be structured. Expect rapid changes: terminology (we will probably talk more about ‘AI tools’ or ‘conversational apps’ than MCP servers in the future), SDK improvements, the emergence of connector marketplaces well integrated into AI applications (imagine a kind of store where you can browse and install extensions for your assistant with a single click – it's coming soon). Keep an eye out for announcements from the major players, and why not contribute to the open-source communities that are shaping the protocol?

Anticipate the impact on your digital strategy

If conversational assistants become a major gateway to online services, you may need to rethink some of your priorities. Tomorrow, ‘being well referenced’ could mean not only appearing at the top of Google, but also being the preferred connector for a given AI query. This raises the question of the content provided to AI via your connectors: a hotel may want to carefully craft the description it presents to the agent to maximise its chances of being recommended. Similarly, customer relations and branding may end up passing through the AI intermediary: how can you maintain your brand identity when the user interacts via ChatGPT? These are all issues to consider in your strategic thinking right now.

In short, if your product or service speaks AI (or rather speaks to AI), it will remain in the running. Otherwise, it may quickly appear outdated to users who are accustomed to conversing with their intelligent assistant.

Conclusion: there will be a tool for everything – and it's time to act

In 2008, the unofficial motto for mobile phones was ‘There's an app for that!’ In 2025, we may well find ourselves saying more and more: ‘There's an AI tool for that.’ MCP servers are paving the way for a world where every digital service can become a piece of the puzzle that is our personal assistant. This is an exciting development that will simplify many uses and create new ones.

For businesses and product visionaries, now is the time to get started. As with the mobile and cloud rushes in their day, the early birds will be the first to benefit. Creating an MCP connector for your service is an opportunity to be at the forefront of the next wave of technology, to learn quickly, and perhaps even to get a head start on your less agile competitors.

At BeTomorrow, we are closely monitoring these developments and are already helping our clients take advantage of them. Are you wondering about the possibilities offered by MCP connectors in your sector? Are you considering developing an MCP server for your product and want support from technical and business experts? Contact us today! We would be delighted to work with you to devise innovative solutions for integrating your services into the AI ecosystem of tomorrow and ensuring that your business takes full advantage of this ongoing revolution. Together, let's lay the groundwork for your future success – in a world where artificial intelligence, more connected than ever, will be an integral part of your users' daily lives.

Sources: OpenAI, Anthropic, Reuters, VentureBeat, La Revue IA[2][9][4][5] (see links for more details).

[1] [2] ChatGPT rockets to 700M weekly users ahead of GPT-5 launch with reasoning superpowers | VentureBeat

[3] [4] [5] [6] Comprendre le protocole MCP (Model Context Protocol) - La revue IA

https://larevueia.fr/comprendre-le-protocole-mcp-model-context-protocol/

[7] [8] Developers generated $1.1 trillion in the App Store ecosystem in 2022 - Apple

[9] [10] [11] [12] OpenAI partners with Etsy, Shopify on ChatGPT payment checkout | Reuters

[13] [14] [15] [16] [17] Buy it in ChatGPT: Instant Checkout and the Agentic Commerce Protocol | OpenAI

https://openai.com/index/buy-it-in-chatgpt/